Reaper includes some tools for managing loudness and peaks. Let's look at them and what they do.

Background

Mastering is the process of preparing completed songs for distribution, in particular adjusting the overall EQ and managing the loudness and peaks. For digital distribution, you generally want to target a specific loudness such as -14 LUFS, and keep peaks at or below -1dB.
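
As a quick worked example of those two targets, here is the arithmetic in Python. The measured values are made up for illustration, and the sketch assumes a plain linear gain shifts loudness and peaks by the same number of dB (which only holds while nothing is clipping).

```python
def peak_overshoot_db(peak_db: float, gain_db: float, ceiling_db: float = -1.0) -> float:
    """Return how many dB the gained peak sits above the ceiling (0.0 if it fits)."""
    return max(0.0, peak_db + gain_db - ceiling_db)

# Hypothetical measurements of an unmastered mix.
measured_lufs = -20.0
measured_peak_db = -4.0
target_lufs = -14.0

# Linear gain needed to reach the loudness target.
gain_db = target_lufs - measured_lufs

# How far the peaks will poke above -1dB once that gain is applied.
overshoot_db = peak_overshoot_db(measured_peak_db, gain_db)

print(f"gain needed: {gain_db:+.1f} dB, peaks over ceiling by {overshoot_db:.1f} dB")
```

Any overshoot reported here is what the clipping stage has to deal with.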

Hard clipping happens when the peaks of a loud signal are clamped. The clamping puts a sharp bend in the waveform, which introduces aliasing and harsh distortion. For content such as sine waves, piano, and voice, this distortion will be very audible. For thick and dirty pop and rock, hard clipping might not be noticed or might yield a pleasing result.
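
A minimal Python sketch of hard clipping, just to make the clamping concrete. The helper names are mine, and full scale (0dB) is assumed to be a linear amplitude of 1.0.

```python
import math

def db_to_linear(db: float) -> float:
    """Convert a level in dB to a linear amplitude (0dB -> 1.0)."""
    return 10.0 ** (db / 20.0)

def hard_clip(sample: float, ceiling: float = 1.0) -> float:
    """Clamp a sample to the range [-ceiling, +ceiling]."""
    return max(-ceiling, min(ceiling, sample))

# One cycle of a sine wave driven 6dB over full scale.
n = 16
gain = db_to_linear(6.0)
driven = [gain * math.sin(2.0 * math.pi * i / n) for i in range(n)]

# Everything above full scale is flattened; the rest passes unchanged.
clipped = [hard_clip(s) for s in driven]
```

The flat tops in `clipped` meet the untouched part of the wave at a sharp corner, and that corner is where the harsh distortion comes from.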

Soft clipping is any method that squishes the peaks down as they approach or exceed the 0dB limit. Soft clipping avoids the sharp bend and harsh distortion of hard clipping, but does affect the sound. There might be some subtle harmonic distortion, or you might notice some of the sharpness of the mix is reduced.
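
One common way to sketch a soft clipper is a smooth saturating curve such as tanh. This is only an assumed example curve, not the curve used by any of the plugins discussed here, and unlike those plugins it bends quiet signals very slightly too.

```python
import math

def soft_clip(sample: float) -> float:
    """Smoothly squash a sample toward +/-1.0 using tanh (an assumed example curve)."""
    return math.tanh(sample)

def linear_to_db(amplitude: float) -> float:
    """Convert a non-zero linear amplitude to dB (1.0 -> 0dB)."""
    return 20.0 * math.log10(abs(amplitude))

# Quiet inputs pass almost unchanged; loud inputs are squeezed under 0dB.
for level in (0.1, 0.5, 1.0, 2.0):
    out = soft_clip(level)
    print(f"in {linear_to_db(level):6.1f} dB -> out {linear_to_db(out):6.1f} dB")
```

The curve never has a corner, so there is no sharp bend, but the output level is pulled down more and more as the input gets louder.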

When mastering, it is common to find, after using gain to reach the desired loudness, that some peaks sit above -1dB and need to be clipped, using hard and/or soft clipping (among the many other methods in the DAW toolkit). Some of these plugins include controls for loudness (gain into the clipping) and peak ceiling (gain after clipping). These are generally the same as you would get by adding gain plugins before and after. The only thing that ultimately matters is the final output: is it loud enough, are the peaks less than -1dB, and is it free of undesired audible artifacts?
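
The "gain in, clip, gain out" idea can be sketched like this. The function `clip_with_controls` and its parameter names are hypothetical; it models a generic clipper with a drive control and a ceiling control, not any specific plugin.

```python
def db_to_linear(db: float) -> float:
    """Convert a level in dB to a linear amplitude (0dB -> 1.0)."""
    return 10.0 ** (db / 20.0)

def hard_clip(sample: float) -> float:
    """Clamp a sample to full scale, i.e. the range [-1.0, +1.0]."""
    return max(-1.0, min(1.0, sample))

def clip_with_controls(sample: float, drive_db: float, ceiling_db: float) -> float:
    """Hypothetical clipper: gain into the clip, then gain after the clip."""
    driven = sample * db_to_linear(drive_db)      # same as a gain plugin in front
    clipped = hard_clip(driven)                   # the clipping stage itself
    return clipped * db_to_linear(ceiling_db)     # same as a gain plugin behind

# Drive +1dB, ceiling -1dB: unity gain overall, with peaks capped at -1dB.
quiet = clip_with_controls(0.5, drive_db=1.0, ceiling_db=-1.0)
loud = clip_with_controls(1.0, drive_db=1.0, ceiling_db=-1.0)
```

Here `quiet` comes out at its original level, while `loud` is clamped and ends up at the -1dB ceiling.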

Setup

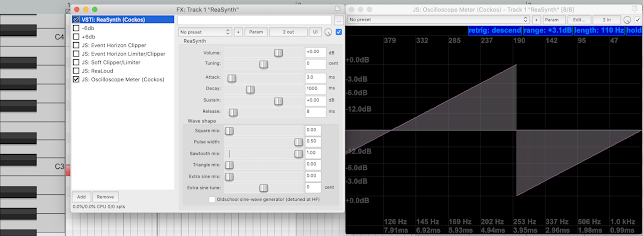

Note at C3 into ReaSynth triangle wave at 0dB into an oscilloscope. The display shows the mapping of input level to output level.

Event Horizon Clipper

"Threshold" boosts gain and introduces hard clipping

"Ceiling" is just a volume duck applied after the hard clipping

"Soft Clip" is a buggy mess that introduces additional unnecessary discontinuities.

Never put this in your mastering chain!

Event Horizon Limiter/Clipper

"Threshold" boosts gain and introduces hard clipping where peaks hit 0dB

"Ceiling" is a gain attenuation applied after the hard clipping

"Release" has no visible effect, even at relevant time scales

"Ceiling" by itself is just a gain attenuation. Moving "Threshold" down loudens at the expense of hard clipping. Hard clipping will be very audible on many types of content, and there are probably better ways to louden. It might be useful at "Threshold -1, Ceiling -1" as a last line of defense to guarantee no peaks above -1dB (common advice to avoid problems that digital music services might have when encoding a file that hits 0dB). But ideally you want to have your peaks under control before hitting this plugin, in which case you wouldn't need it at all. If you notice that the input meter recorded a peak above -1dB, then clipping happened somewhere, and it would be audible on a sine wave. If you see an input peak of 6dB, then this plugin probably introduced aliasing artifacts.

JS Soft Clipper / Limiter

Bends the tops of peaks so they don't stick as far up. Imposes a hard clipping limit on the output signal. Below -6dB the response is linear, so it will only impact the louder parts of the peaks.

"Boost" boosts gain going into the curve, increasing loudness, pushing peaks further into the bend, and possibly introducing or increasing hard clipping.

"Output Brickwall" moves the hard clipping threshold down, without decreasing loudness. This makes the bend more extreme, and makes it more likely your peaks hit the hard clipping threshold. For example, if my peaks sit at exactly 0dB and I pull the brickwall down to -1dB, the top 1dB of my peaks is now hard clipped.

With controls at 0, if the input peak is 0dB, the output peak will be bent down to -1.9dB, giving you more headroom. From there, I can use Boost to add 4dB of fairly transparent loudness, which brings the output peaks back up to 0dB. At this point any further boost, pulling down the brickwall, or higher input peaks will introduce hard clipping.

This plugin is a nice option because it allows some loudening before hard clipping. If the input peaks are not under control, though, hard clipping will be introduced.

JS ReaLoud

Implements a curve map which soft clips, guaranteeing output peaks never exceed 0dB, and adds 3dB of loudness in the process. The curve is linear until around -3dB, so only the highest peaks are modified.

"Mix" fades the effect in. At 0% the signal is untouched, at 50% there will be 1.5dB of loudness added. Note that the 0dB clipping is always applied, and the lower the mix value the harder the clipping (the sharper the angle).

There is no free lunch; the bending of the curve on peaks will color the sound somewhat. And it kicks in pretty hard at -3dB (compared to the -6dB of the soft clipping part of JS Soft Clipper). But even though extreme peaks will end up completely flat (which is not a natural place for the speaker cone to sit), the curve bends smoothly into flat, so it doesn't introduce sharp aliasing artifacts the way that hard clipping does.

The 3dB of loudness gain is not only arbitrary but almost beside the point. This plugin gives you a nice soft clipping to tame your peaks. Put a -3dB gain in front of it, and you cancel out the loudness boost. If you need more loudness, add a linear gain in front of it and drive it to the desired loudness.

There is also a version with a lowpass filter.

Recommended. Set mix to 100% to avoid hard clipping. Follow with a -1dB attenuation to avoid 0dB peaks in the output file. Put a pre-gain in front of it to adjust the desired loudness. Start with -3dB to avoid driving the curve. Measure the LUFS of the output file, and increase the pre-gain as much as necessary to hit -14 LUFS. Always double-check with your ears.
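
The shape of that chain (pre-gain, soft clip, -1dB attenuation) can be sketched in Python. Note the big assumption: tanh is used as a stand-in for the soft clipping curve, because all that matters for this sketch is that the curve never exceeds 0dB. It is not ReaLoud's actual curve, and `mastering_chain` is a hypothetical name.

```python
import math

def db_to_linear(db: float) -> float:
    """Convert a level in dB to a linear amplitude (0dB -> 1.0)."""
    return 10.0 ** (db / 20.0)

def mastering_chain(sample: float, pre_gain_db: float, post_gain_db: float = -1.0) -> float:
    """Pre-gain -> soft clip (tanh stand-in, assumed) -> fixed attenuation."""
    driven = sample * db_to_linear(pre_gain_db)   # adjust this to hit the loudness target
    shaped = math.tanh(driven)                    # stand-in curve, bounded by 0dB
    return shaped * db_to_linear(post_gain_db)    # keeps output peaks at or below -1dB

# However hard the curve is driven, the output stays under the -1dB ceiling.
ceiling = db_to_linear(-1.0)
outputs = [mastering_chain(s, pre_gain_db=6.0) for s in (-1.0, -0.5, 0.0, 0.5, 1.0)]
```

Because the attenuation comes after a curve that is bounded by 0dB, raising the pre-gain changes the loudness and the amount of coloration, but never the peak ceiling.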

JS Louderizer

"Mix" fades the effect in.

"Drive" adjusts the curve.

Mix with no Drive does nothing, including no clipping.

Drive with no Mix does nothing, including no clipping.

With Drive at 100%, slowly bringing the Mix in bends the curve.

Drive 100 Mix 100 looks the same as ReaLoud for inputs less than 0dB, but overdriving it produces a negative response!
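
To illustrate how a curve can behave well below 0dB and then turn around when overdriven, here is a sketch using a plain cubic waveshaper with no clamp. This is purely an assumed example; I have not checked it against Louderizer's actual code.

```python
def unclamped_cubic(sample: float) -> float:
    """Cubic waveshaper 1.5x - 0.5x^3 with no clamp (assumed example, not the plugin's code)."""
    return 1.5 * sample - 0.5 * sample ** 3

# Up to full scale the curve rises smoothly and flattens out at 1.0.
at_full_scale = unclamped_cubic(1.0)

# Past full scale the output falls as the input rises, and eventually flips sign.
overdriven = unclamped_cubic(1.5)
far_overdriven = unclamped_cubic(2.0)

print(at_full_scale, overdriven, far_overdriven)
```

A curve like this needs its input clamped to full scale first if it is going to be safe against hot peaks.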

Strictly inferior to ReaLoud and rather useless for mastering.